Memoization using Closures

I wrote a little article about how to use closures in Python a couple weeks ago, but I’m not convinced that I really gave a good motivation for why we might want to use them. The example I gave was a little bit simplistic—here’s a better one.

First, we need to talk about memoization (if you’re familiar with memoization already, feel free to skip along a bit). Suppose we want to write a function to calculate the nth Fibonacci number. We might first write a naïve recursive function:

def fib(n):

if n < 2:

return n

else:

return fib(n - 1) + fib(n - 2)

This does the job (assuming we give it appropriate input), but it’s actually

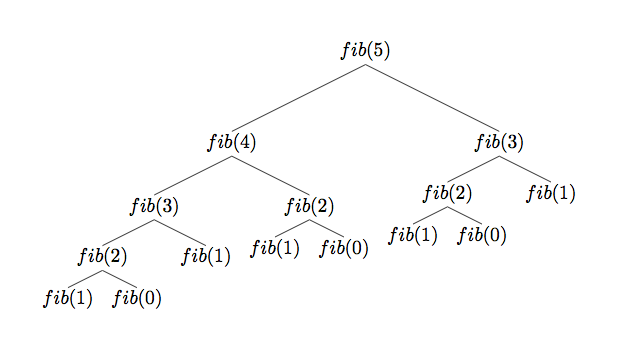

terribly inefficient. Check out the call tree for fib(5):

We’ve got a few redundant calls here (notably fib(2) and fib(3)), and for

higher values of n they really start adding up. As it turns out, this

Fibonacci implementation is O(2n). Our elegantly simple algorithm is

awful, just awful.

But we can salvage it! If we were to record our calculated values (by closing over our already-calculated values, say) we could make this thing phenomenally more efficient.

def memoized_fib(n):

values = [0, 1]

def fib_helper(k):

if len(values) > k:

result = values[k]

else:

result = fib_helper(k - 1) + fib_helper(k - 2)

values.append(result)

return result

return fib_helper(n)

We’re creating a list called values, for which each entry contains the

Fibonacci number associated with its index, starting with the first two. After

we’ve recursed all the way down the first time, we build up the list as we pop

functions off the stack, so subsequent calls to fib_helper don’t really need

to do any recursion at all—they can just look up the appropriate value in

values. The list values is hidden inside a closure, so it’s protected from

the outside world. We certainly could have implemented memoization using a

global variable, but why would we if we we don’t have to? Our closure provides

an equally efficient and more elegant solution to the problem.

Just to drive home the efficiency gain, memoized_fib(500) takes 0.014s to run

on my machine; fib(500) is still calculating an hour later. Which makes sense,

since we’ve replaced an exponential algorithm with a linear one. Feels good.

And, yes, it’s totally true that we could have just calculated the Fibonacci numbers iteratively and avoided the whole fiasco. But what would we have learned?

You might like these textually similar articles: